I’ve arrived!

After some hiccups with API, and my shoes setting off the metal detector, which earned me a full body scan and more from the security guards, the flight here was thankfully uneventful. SwissAir provided free drinks and lemon cake in flight, which was unexpected but welcome.

The sight of the Alps as the plane was coming in to land in Zurich reminded me how much I love being in the mountains. Although Zurich is not really in the Alps, it’s not too far away, and just the overall feel of Switzerland (France too, but I’m not in France) is really relaxing and calming.

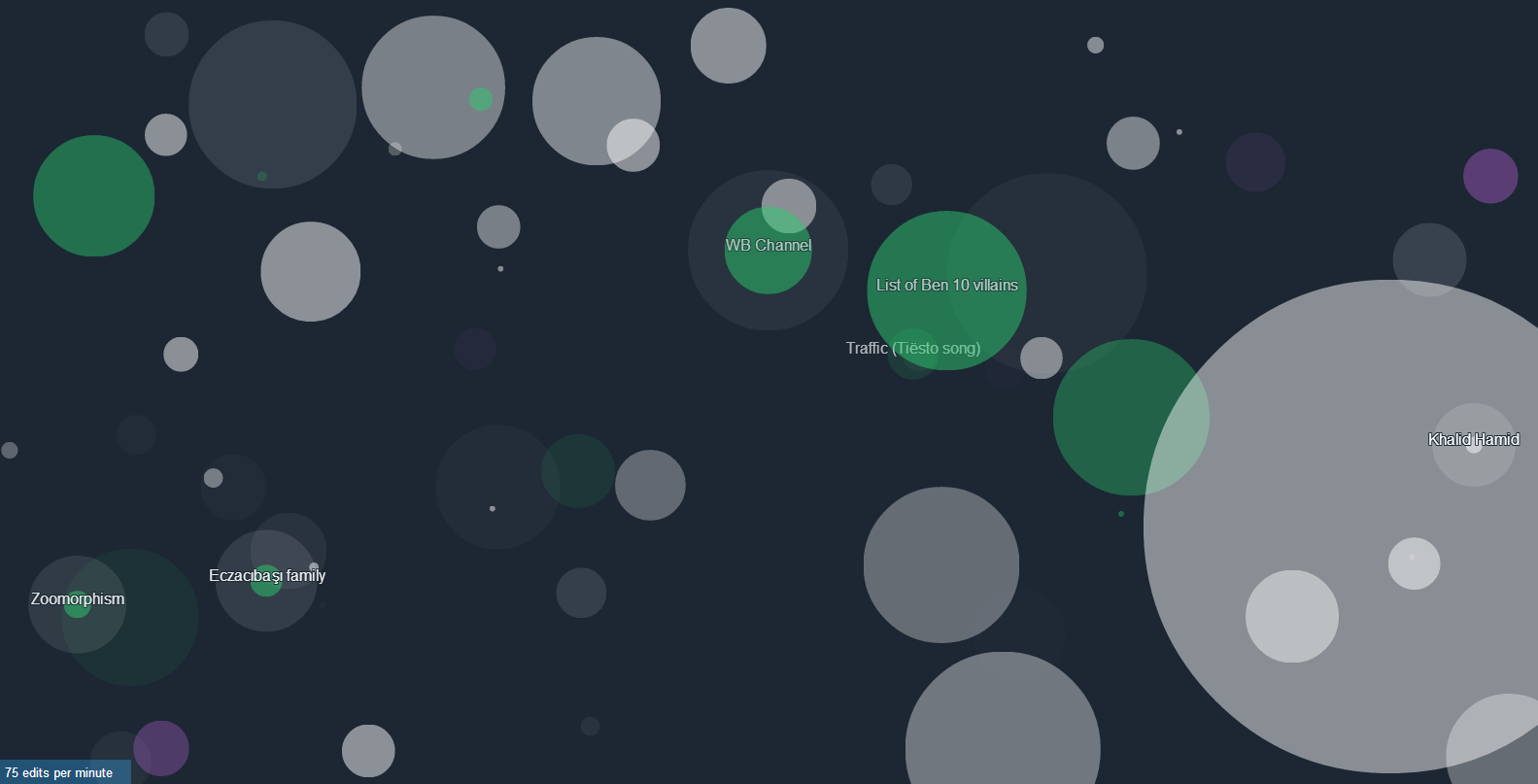

I’m quite glad to be back on the continent again; it’s just a completely different atmosphere. Unfortunately, I’m not going to be able to get out and explore Zurich much while I’m here, as I have a lot of coding to do for the Wikimedia Hackathon!

It’s good to see faces I’ve not seen for a year, and to put faces to the names of people I didn’t meet last year in Amsterdam. While the Hackathon hasn’t officially started yet, a fair few people are already getting down to work, myself included. I’ve been making sure that the code I’m going to be working on is in a fit state to have new features added to it, so that I’m not building new features on top of existing bugs. I’m also attempting to replicate the working copy of the code that sits on my desktop machine onto my laptop – I thought I had all the pieces I needed, then I remembered how long it has been since I last did software development on my laptop!

Power initially proved to be problematic too. I thought I had a Swiss power adaptor, but all of the adaptors I had were in fact only European ones. A mad dash around the shops before I left proved fruitless, so I had to buy one in the airport. UK and EU plugs are larger than Swiss plugs, and the elegant Swiss sockets allow three plugs to be inserted in a space only slightly larger than a single UK or EU plug. This means that when an adaptor is plugged directly into a Swiss socket, the ability to use the other two Swiss sockets is severely limited. Thankfully, a fair few people (myself included) have brought extension leads (mainly European), which others can plug into without problems.

The main aim for my time here is to get a framework in place for supporting OAuth within the account creation tool, allowing us to replace a few key pieces of functionality for which the current implementation is not ideal. Firstly, I want to replace our confirmation of on-wiki usernames. Then I want to work towards having the welcomer bot use the account creator’s actual account, rather than faking their identity with a bot. Lastly, it would be awesome if the account creation tool could actually create the accounts itself, so that we don’t need to redirect to the creation web form.

I’m hoping to get through as much of that list as possible with assistance from the development team of the extension, but overall I’m aiming to get far enough with a working framework that I can complete the rest without assistance. If I can also resolve some of the extension’s outstanding issues and learn more about MediaWiki in the process, it will be even better.